Load Balancing

Load balancing refers to distributing incoming traffic across a group of backend servers. To serve a high volume of concurrent requests from users or clients, a “traffic distributor” must sit in front of the backend servers and route requests across all workers capable of fulfilling them.

The load balancer is responsible for the following functions:

Distributes client requests or network load efficiently across multiple backend servers

Ensures high availability and reliability by sending requests only to servers that are online and that can handle the request in a timely manner

Provides the flexibility to scale up or down as demand dictates

In the context of Object Storage, the backend servers are the Proxy Virtual Controllers.

How load is balanced in the Object Storage

The NextGen Object Storage load balancer has two modes of operation:

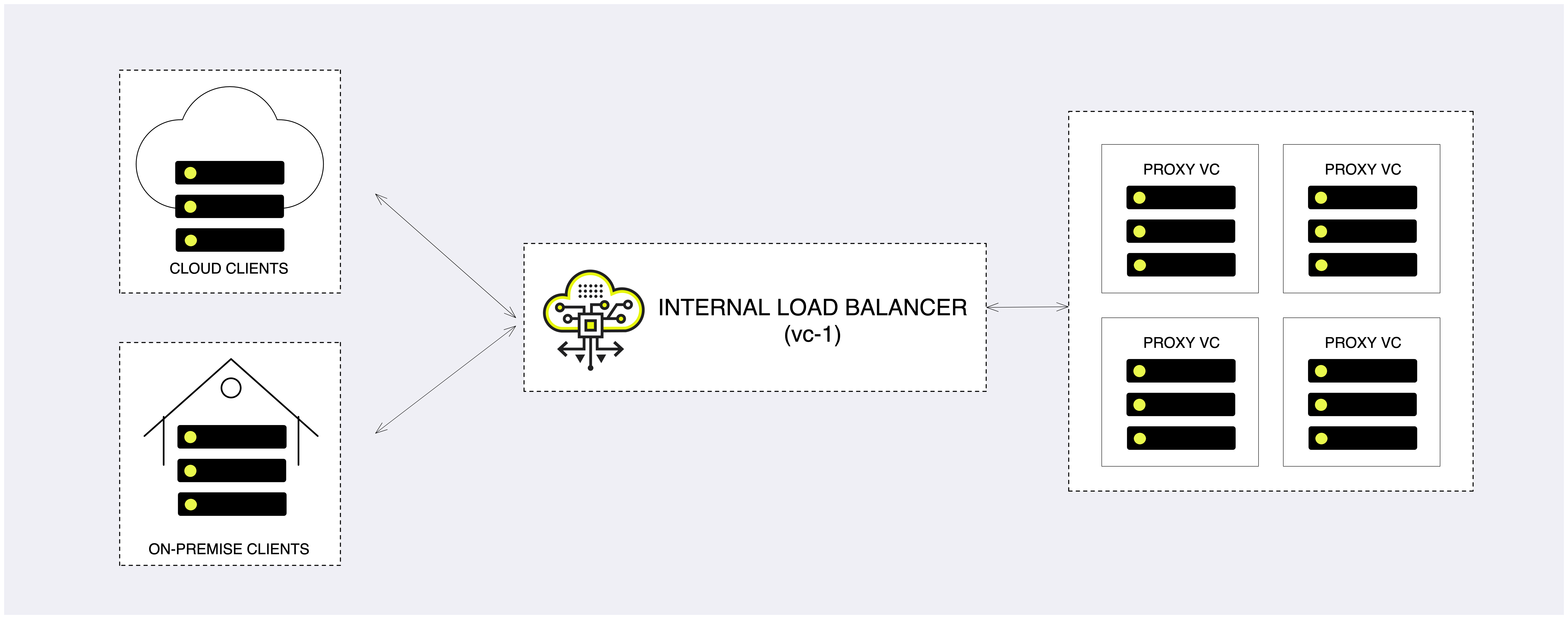

Internal load balancer (default)

Every NextGen Object Storage instance is provisioned with a built-in load balancer (referred to as the Internal Load Balancer) that works seamlessly out of the box. The internal load balancer is fully configured to provide a secure, reliable, and highly available endpoint. When a new Object Storage instance is provisioned, it is assigned a globally unique FQDN on the Object Storage front-end network, along with a matching certificate from a well-known CA that secures communication over HTTPS.

If a public IP or an additional VNI (Virtual Network Interface) is allocated to the Object Storage, the internal load balancer is used to distribute that workload as well. The main difference between frontend network and public/VNI handling is the load balancer’s mode of operation:

Frontend: DSR (Direct Server Return). Response packets from the Object Storage Virtual Controllers bypass the load balancer, maximizing egress throughput.

Public/VNI: the load balancer acts as a gateway for all traffic to and from the Object Storage Virtual Controllers.

Load balancing algorithm

The load balancer uses the weighted least connection (wlc) algorithm: each new connection goes to the worker with the fewest active connections relative to its weight. As a reminder, Controller VCs and Storage VCs are excluded from handling client/user operations.
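The selection rule can be illustrated with a minimal Python sketch. It assumes each VC is modelled by a hypothetical `Worker` record carrying a role, a weight, and its current connection count; the role labels and VC names are illustrative, not the product’s internal API. Only proxy VCs are eligible, and the worker with the lowest active-connections-to-weight ratio wins, with ties going to the first worker in the list.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    """Hypothetical model of one VC as seen by the load balancer."""
    name: str
    role: str        # illustrative labels: "proxy", "controller" or "storage"
    weight: int      # relative capacity; a higher weight attracts more connections
    active: int = 0  # currently open connections


def pick_wlc(workers: list[Worker]) -> Worker:
    """Return the eligible worker with the lowest active/weight ratio."""
    # Controller and Storage VCs never serve client operations.
    eligible = [w for w in workers if w.role == "proxy" and w.weight > 0]
    if not eligible:
        raise RuntimeError("no eligible worker")
    # Compare active_a/weight_a < active_b/weight_b by cross-multiplying,
    # which avoids floating point; ties keep the earlier worker.
    best = eligible[0]
    for w in eligible[1:]:
        if w.active * best.weight < best.active * w.weight:
            best = w
    return best


def assign(workers: list[Worker]) -> str:
    """Route one new connection and record it on the chosen worker."""
    chosen = pick_wlc(workers)
    chosen.active += 1
    return chosen.name
```

With two proxy VCs weighted 1 and 2 and no existing connections, six consecutive calls to `assign` leave them with 2 and 4 connections respectively, matching the 1:2 weight ratio, while a controller VC in the same pool receives none.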

High availability

As with all other core services in the system, the internal load balancer is highly available. The service is hosted on a Controller VC (vc-1); if vc-1 fails, it fails over to vc-0 to ensure service continuity.

Load balancer metering

While the actual Object Storage operations are distributed to the backend servers, a system administrator may want to review the number of concurrent sessions the Object Storage is handling.

In NextGen Object Storage, the active session count can be reviewed in the management interface:

Virtual Controllers view: navigate to the Virtual Controllers view and select the virtual controller with ID vc-1. In the south pane, select the Frontend Metering tab.

Performance section: under the system section, navigate to the Performance section. Expand the Virtual Controller > Controller > vc-1 view and drag and drop the Active Connections pane.

The Active Connections graph provides a per-VC breakdown of connections within the Object Storage. The user can toggle between the graph view and the table view using the TABLES view.

Using an external load balancer

Zadara’s Object Storage provides an easy way to integrate with an existing or newly provisioned software/hardware load balancer.

The main purpose is to expose the Object Storage S3 interface to external applications.

There are many load balancer solutions on the market, and the procedures for setting them up are broadly similar. This appendix gives an example using HAProxy, an open-source TCP/HTTP load-balancing proxy server available at www.haproxy.org.

The following example sets up an external load balancer to terminate TLS connections and distribute them across all Proxy VCs.

This configuration provides secure and efficient traffic management for all NextGen Object Storage services:

SSL Termination occurs on the external load balancer for both Object Operation APIs and GUI connections. This setup ensures centralized TLS handling.

However, TLS for authentication traffic is always terminated within the Object Storage system itself.

A custom TLS certificate installed on the load balancer is used to secure all TLS connections.

Object Operation connections are forwarded to the NextGen Object Storage proxy virtual controllers (VCs), with uneven traffic distribution. Proxy VCs handle the majority of the load, storage VCs carry less load, and HA VCs carry the least load.

The load balancer preserves client visibility by adding a header with the original client IP address, enabling accurate logging and traceability within the Object Storage proxies.

Continuous HTTP-based health checks are performed by the load balancer to monitor the availability of all proxy VCs.

Authentication connections are forwarded to the Object Storage floating IP using SSL pass-through, where TLS is terminated by the Object Storage system itself. A matching custom TLS certificate must also be uploaded there.

User Interface (GUI) traffic received on port 8443 is forwarded to the Object Storage floating IP, providing secure access to the management interface.

A graphical statistics interface is enabled on the load balancer to monitor connection status, backend health, and traffic metrics.
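On the receiving side, a service behind the load balancer typically recovers the original client address from the X-Forwarded-For header. The Python sketch below shows one common approach, assuming a hypothetical set of trusted load balancer addresses (192.0.2.10 in the examples is a placeholder): the header is honored only when the TCP peer is a trusted proxy, and the nearest untrusted hop is taken as the client. It is not the Object Storage proxies’ actual implementation.

```python
import ipaddress
from typing import Optional, Set


def client_ip(peer: str, xff: Optional[str], trusted_proxies: Set[str]) -> str:
    """Return the original client IP for one request.

    peer            -- address of the TCP peer that connected to this service
    xff             -- raw X-Forwarded-For header value, or None if absent
    trusted_proxies -- load balancer addresses allowed to set the header (assumption)
    """
    # A client talking to the service directly could forge the header, so ignore it.
    if peer not in trusted_proxies or not xff:
        return peer
    hops = [h.strip() for h in xff.split(",") if h.strip()]
    # Walk from the right (the hop closest to this service) and skip trusted proxies.
    for hop in reversed(hops):
        if hop not in trusted_proxies:
            # Validate before logging; raises ValueError on malformed input.
            return str(ipaddress.ip_address(hop))
    return peer
```

Because the sample configuration uses `option forwardfor if-none` on the object-operations path, HAProxy does not add its own entry when the client already sent an X-Forwarded-For header, so even the rightmost value there may be client-supplied; the GUI frontend instead overwrites the header with `http-request set-header`.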

Object Storage Settings

Set the internet-facing domain name or IP of the external load balancer as the NextGen Object Storage API Hostname/IP.

Upload your custom TLS certificate. It will be used for authentication connections. The certificate should match the custom domain name.

Set SSL Termination to “External”.

HAProxy Configuration

This example configuration was tested on HAProxy version 2.8.5-1ubuntu3.4 2025/10/01.

This configuration sets up HAProxy as a secure, multi-service reverse proxy and load balancer for Zadara’s Object Storage operations, authentication, and GUI components.

It terminates TLS on the object-operations and GUI frontends (authentication traffic is passed through), forwards requests to internal application nodes, performs health checks, and exposes HAProxy’s small stats/admin interface for monitoring.

A custom TLS certificate is required when TLS termination occurs in the HAProxy server. HTTP requests are sent to the Object Storage Proxy controllers.

In this configuration, the following addresses and roles are used:

| IP / Port | Purpose | Traffic Type |
|---|---|---|
| 10.0.1.55 | Primary HAProxy frontend node IP (the public or edge interface). | All client connections terminate here. |
| 172.16.224.110 / 111 | Internal backend servers (Object Storage VCs). | HAProxy forwards requests here over HTTP. |
| 172.16.224.114 | The Object Storage floating IP. | HAProxy forwards requests here over HTTP/TCP (authentication and UI operations). |

Frontend definitions

| Frontend | Bind / Port | Mode | Purpose / Function |
|---|---|---|---|
| fe-object-operations | 10.0.1.55:443 | HTTP (TLS-terminated) | Handles Object Storage API operations securely. Forwards to be-ngos-object-operations. |
| fe-auth | 10.0.1.55:5000 | TCP passthrough | Forwards authentication traffic as raw TCP (TLS pass-through) to the backend at 172.16.224.114:5000, where the Object Storage terminates TLS. |
| fe-gui | 10.0.1.55:8443 | HTTP over TLS | Serves the management GUI over HTTPS. Adds forwarded headers (X-Forwarded-For, X-Forwarded-Proto, X-Forwarded-Host, X-Forwarded-Port, X-Forwarded-Ssl) and forwards to be-floating-ngos-gui. |

Backend definitions

| Backend | Mode | Members / IPs | Function |
|---|---|---|---|
| be-ngos-object-operations | HTTP | 172.16.224.110:80, 172.16.224.111:80 | Load balances object API requests between two proxy nodes using round-robin. Performs HTTP health checks (HEAD /healthcheck, expecting status 200). |
| be-floating-ngos-gui | HTTP | 172.16.224.114:8080 | Routes GUI (web interface) requests to the floating GUI instance. |
| be-floating-ngos-auth | TCP | 172.16.224.114:5000 | Passes raw TCP authentication traffic (no HTTP processing) to the floating auth node. |
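Round-robin selection with per-server weights and health state can be sketched in Python as below. This uses the smooth weighted round-robin technique (the variant popularized by nginx), not HAProxy’s internal implementation; the `Server` record is hypothetical, while the server names and weights are taken from the sample configuration. Servers marked DOWN by health checks are simply left out of the rotation.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical view of one backend server."""
    name: str
    weight: int
    up: bool = True   # result of the latest health check
    current: int = 0  # running score used by smooth weighted round-robin


def next_server(servers: list[Server]) -> Server:
    """Pick the next server among those currently UP (smooth weighted round-robin)."""
    live = [s for s in servers if s.up and s.weight > 0]
    if not live:
        raise RuntimeError("no server available (all DOWN)")
    total = sum(s.weight for s in live)
    # Every live server earns its weight; the highest score is served and pays back the total.
    for s in live:
        s.current += s.weight
    best = max(live, key=lambda s: s.current)  # ties go to the first server in the list
    best.current -= total
    return best
```

With the two `weight 100` proxies from the sample configuration, requests alternate between ngos-proxy-01 and ngos-proxy-02; once one is marked DOWN, all requests go to the other. Unequal weights such as 5/1/1 produce an interleaved order (a, a, b, a, c, a, a) rather than bursts.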

Stats/Admin Interface

Listen section (stats):

bind *:1936 (should be restricted to internal access). Provides a small HTTP page for live stats and backend health.

Currently protected by basic auth (zadara:zadara); access should be restricted or the credentials replaced with stronger ones.
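Besides the HTML page, HAProxy serves the same statistics in CSV form when `;csv` is appended to the stats URI (with `stats uri /`, that is `http://<haproxy-host>:1936/;csv`). The Python sketch below fetches that output with basic auth and reduces it to a per-server status map; the host name is a placeholder, and the sample credentials should be replaced as noted above. Parsing is done by column name, so it does not depend on the exact column count of a given HAProxy version.

```python
import base64
import csv
import io
import urllib.request
from typing import Dict, Tuple


def fetch_stats_csv(base_url: str, user: str, password: str, timeout: float = 5.0) -> str:
    """Download HAProxy statistics as CSV by appending ';csv' to the stats URI."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        base_url.rstrip("/") + "/;csv",
        headers={"Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode()


def server_status(csv_text: str) -> Dict[Tuple[str, str], Tuple[str, int]]:
    """Map (proxy, server) to (status, current sessions), skipping aggregate rows."""
    # The header line starts with '# ', e.g. '# pxname,svname,qcur,qmax,scur,...'.
    if csv_text.startswith("# "):
        csv_text = csv_text[2:]
    result = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        # FRONTEND/BACKEND rows are per-proxy totals, not individual servers.
        if row["svname"] in ("FRONTEND", "BACKEND"):
            continue
        result[(row["pxname"], row["svname"])] = (row["status"], int(row["scur"] or 0))
    return result
```

For example, `server_status(fetch_stats_csv("http://<haproxy-host>:1936", user, password))` yields keys such as `("be-ngos-object-operations", "ngos-proxy-01")`, which makes it easy to alert on any server whose status is not UP.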

Misc Options

SSL termination: TLS is handled at the frontend (cert: /etc/ssl/private/zadara_custom.pem).

Forwarded headers: preserves the client IP (X-Forwarded-For) and scheme (X-Forwarded-Proto) for accurate logging and application behavior.

Health checks: the HTTP backends periodically send a HEAD request (/healthcheck for object operations, / for the GUI) and expect status 200; the authentication backend uses a TCP connect check. Failed servers are marked DOWN automatically.

Limits & tuning: maxconn 2048, timeouts of 5s (connect) and 50s (client/server).

Security baseline: TLS 1.2+ only.
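The health-check behavior can be approximated in Python for troubleshooting, for example to confirm from the HAProxy host that a proxy VC answers `HEAD /healthcheck` with status 200. This is a sketch under assumptions: `probe` uses `http.client`, which sends HTTP/1.1 rather than the HTTP/1.0 used in the sample configuration, and the hypothetical `ServerHealth` class imitates HAProxy’s default rise/fall thresholds (2 successes to come back UP, 3 failures to go DOWN) without reproducing its exact startup behavior.

```python
import http.client


def probe(host: str, port: int, path: str = "/healthcheck", timeout: float = 2.0) -> bool:
    """Run one active check: send HEAD <path> and treat only HTTP 200 as healthy."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("HEAD", path)
        return conn.getresponse().status == 200
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, or a malformed response all count as failures.
        return False
    finally:
        conn.close()


class ServerHealth:
    """UP/DOWN state with rise/fall hysteresis (defaults mirror HAProxy's rise 2 / fall 3)."""

    def __init__(self, rise: int = 2, fall: int = 3) -> None:
        self.rise = rise
        self.fall = fall
        self.up = True    # illustrative assumption: start in the UP state
        self._streak = 0  # consecutive results that contradict the current state

    def record(self, ok: bool) -> bool:
        """Feed one check result and return whether the server is now UP."""
        if ok == self.up:
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= (self.fall if self.up else self.rise):
                self.up = ok
                self._streak = 0
        return self.up
```

Feeding three failed probes into a fresh `ServerHealth` returns True, True, then False; two successful probes afterwards bring the server back UP, so a single transient failure never takes a server out of rotation.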

Known Limitation

Currently, the GUI does not allow mixed HTTPS and HTTP content. Therefore, if the management interface is required for console operations, it must be accessed internally; alternatively, use an S3-compatible Object Storage browser or CLI tool.

Caution

Disclaimer

This configuration is provided as a sample reference only. It should be carefully reviewed, customized, and hardened according to your organization’s security policies, operational requirements, and infrastructure standards.

Before deploying to production, validate SSL/TLS settings, authentication methods, access controls, and monitoring parameters to ensure compliance with your internal security and performance guidelines.

HAProxy configuration file

Configure /etc/haproxy/haproxy.cfg.

Example:

```haproxy
global
    maxconn 2048
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    tune.ssl.default-dh-param 2048

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # SSL defaults (adjust to your policy)
    ssl-default-bind-options no-sslv3 no-tlsv10 no-tlsv11
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS
    ssl-default-bind-ciphersuites TLS_AES_256_GCM_SHA384:TLS_AES_128_GCM_SHA256:TLS_CHACHA20_POLY1305_SHA256

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5s
    timeout client 50s
    timeout server 50s
    option http-server-close
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

frontend fe-object-operations
    bind 10.0.1.55:443 ssl crt /etc/ssl/private/zadara_custom.pem
    mode http
    option forwardfor if-none
    http-request set-header X-Forwarded-Proto https
    default_backend be-ngos-object-operations

frontend fe-auth
    bind 10.0.1.55:5000
    mode tcp
    option tcplog
    default_backend be-floating-ngos-auth

frontend fe-gui
    bind 10.0.1.55:8443 ssl crt /etc/ssl/private/zadara_custom.pem
    mode http
    option forwardfor if-none
    # Tell the app the original client details
    http-request set-header X-Forwarded-For %[src]
    http-request set-header X-Forwarded-Proto https
    http-request set-header X-Forwarded-Host %[req.hdr(Host)]
    http-request set-header X-Forwarded-Port %[dst_port]
    # Some Rails setups also look at this:
    http-request set-header X-Forwarded-Ssl on
    default_backend be-floating-ngos-gui

backend be-floating-ngos-gui
    mode http
    option httpchk
    http-check send meth HEAD uri / ver HTTP/1.0
    http-check expect status 200
    server ngos-floating 172.16.224.114:8080 check

backend be-ngos-object-operations
    mode http
    balance roundrobin
    option forwardfor if-none
    option httpchk
    http-check send meth HEAD uri /healthcheck ver HTTP/1.0
    http-check expect status 200
    server ngos-proxy-01 172.16.224.110:80 weight 100 check
    server ngos-proxy-02 172.16.224.111:80 weight 100 check

backend be-floating-ngos-auth
    mode tcp
    # No tcp-check rules defined, so this is a plain TCP connect check
    option tcp-check
    server ngos-floating 172.16.224.114:5000 check

listen stats
    bind *:1936
    mode http
    stats enable
    stats uri /
    # Plain-text credentials. For stronger auth, define a userlist with a hashed
    # password and use 'stats http-request auth unless { http_auth(<userlist>) }' instead.
    stats auth zadara:zadara
```