Pools

Understanding Storage Pools

Storage Pools are virtual entities that manage storage provisioning from the aggregated capacity of one or more RAID Groups pooled into a single construct with some QoS attributes.

Volumes are thinly provisioned, allocating capacity from the Pool only when needed. The Pool has an underlying block virtualization layer which maps virtual address space to physically allocated Pool space and manages sharing of Pool physical chunks between Volumes, Snapshots and Clones.

Snapshots and Clones consume zero capacity when they are created because they share the same data chunks as the originating Volume. Whenever data in the Volume or in one of its Clones is modified, the affected data chunk is copied on write (COW): the new data is written to a new Pool region, so the data sets of other objects that share the same chunk are not affected.

The Pool’s attributes define the way Volumes, Snapshots and Clones are provisioned.

A single VPSA can have one or more storage Pools. The number of Pools supported depends on the VPSA type and engine type:

- VPSA Storage Array: 3 Pools
- VPSA Flash Array:
  - H100: 1 Pool
  - H200: 2 Pools
  - H300: 2 Pools
  - H400: 2 Pools

Tiers

Scope: VPSA Flash Array

Note

From version 20.12, VPSA Flash Array supports a mixture of media types within one storage Pool, as tiers. Tiering optimizes costs by automating data placement: the VPSA tracks segments, keeping frequently accessed data on SSD storage and infrequently accessed data on HDD.

The high tier (also known as tier 0) is the more performant tier of the VPSA, and comprises in-array SSD/NVMe drives.

The low tier (also known as tier 1) is the capacity-oriented tier of the VPSA. It can be implemented via in-array SATA/NLSAS drives, or by connectivity to a remote object storage container.

Supported configurations:

Tier 0 is SSD and Tier 1 (low tier) is HDD.

Tier 0 is SSD and Tier 1 (low tier) is Remote Object Storage.

Inline data reduction is supported regardless of the actual data location. Data placement in the VPSA Pool is adjusted dynamically, based on an internal heat index. Scanning, promotion, and demotion of data between tiers are embedded in the VPSA garbage collection cycle.

The VPSA tier manager keeps track of heat scores for the hottest LSA chunks in each tiered Pool.

The heat score is calculated according to:

Frequency of chunk read-write operations

Chunk deduplication references
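The documentation names the two inputs to the heat score but not how they are combined. The following minimal Python sketch ranks chunks under an assumed weighted sum, only to illustrate how "hottest chunks" could be selected; the weights, the `Chunk` type, and the `heat_score` and `hottest` functions are hypothetical, not the VPSA tier manager's actual formula.

```python
from dataclasses import dataclass

# Hypothetical weights: the inputs come from the documentation,
# the way they are combined is an assumption for illustration.
IO_WEIGHT = 1.0
DEDUP_WEIGHT = 2.0


@dataclass
class Chunk:
    chunk_id: int
    io_count: int    # read/write operations seen in the tracking window
    dedup_refs: int  # deduplication references pointing at this chunk


def heat_score(chunk: Chunk) -> float:
    """Combine access frequency and dedup references into one score."""
    return IO_WEIGHT * chunk.io_count + DEDUP_WEIGHT * chunk.dedup_refs


def hottest(chunks: list, n: int) -> list:
    """Return the n chunks with the highest heat scores."""
    return sorted(chunks, key=heat_score, reverse=True)[:n]


chunks = [Chunk(1, 10, 0), Chunk(2, 2, 5), Chunk(3, 1, 0)]
print([c.chunk_id for c in hottest(chunks, 2)])  # [2, 1]
```

Counting deduplication references lets a chunk that many Volumes share rank as hot even when each individual reference sees little I/O.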

The VPSA attempts to stabilize SSD utilization at a steady state around 80%.

| SSD utilization | Promotion and demotion considerations |
|---|---|
| Below steady state | All data is retained in SSD and no demotions take place. |
| Around steady state (stabilization target) | Promotions and demotions both take place, based on placement considerations. |
| Above steady state | Demotions are increased and promotions are blocked. |
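The SSD utilization bands in the table above can be expressed as a small decision function. This is a minimal Python sketch, not the VPSA tier manager. The 80% target comes from this section. The 5 percentage-point tolerance that defines "around" the steady state is an assumption, as are the `TierAction` enum and the `tier_action` function.

```python
from enum import Enum

STEADY_STATE = 0.80  # SSD utilization target stated in this section
BAND = 0.05          # hypothetical tolerance that defines "around" the target


class TierAction(Enum):
    RETAIN_IN_SSD = "all data retained in SSD, no demotions"
    BALANCE = "promotions and demotions based on placement"
    DEMOTE = "demotions increased, promotions blocked"


def tier_action(ssd_utilization: float, band: float = BAND) -> TierAction:
    """Map SSD utilization (0.0 to 1.0) to a row of the table above."""
    if ssd_utilization < STEADY_STATE - band:
        return TierAction.RETAIN_IN_SSD
    if ssd_utilization <= STEADY_STATE + band:
        return TierAction.BALANCE
    return TierAction.DEMOTE


for u in (0.50, 0.80, 0.92):
    print(f"{u:.0%}: {tier_action(u).value}")
```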

Chunk promotion: Chunks can be promoted to a higher tier:

By the low tier defragger

On host reads

By the tier manager, based on periodic assessment of the chunks with the highest heat scores

Chunk tier demotion: Chunks can be demoted to a lower tier:

By the SSD defragger

By the tier manager, when SSD utilization is at or above the steady state

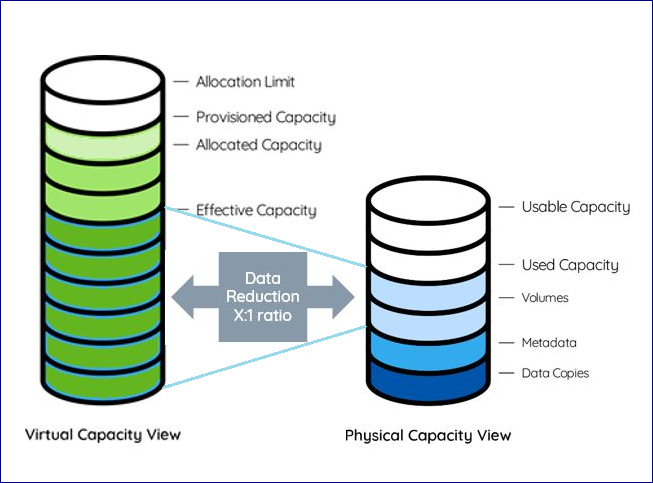

Understanding Pool Capacity

Scope: VPSA Flash Array

The introduction of data reduction makes Pool capacity management more complex. Data reduction efficiency depends on the nature of the data, so it is harder to predict the drive capacity needed for each workload.

Capacity metrics to consider:

Physical View

Raw Capacity - Sum of the capacities of all drives in the Pool

Usable Capacity - Total capacity of all RAID Groups in the Pool

Note

The system keeps about 0.5% of each RAID Group’s capacity as its internal spare.

Used by Volumes - Capacity used to store the Volumes’ data

Used by metadata - Capacity used to store the Pool’s metadata

Used by data copies - Capacity used to store the Snapshots and Clones

Used Capacity - The total size of all data written in the Pool

Used Capacity = Used by Volumes + Used by metadata + Used by data copies

Free Capacity - Available Capacity in the Pool that can be used for new Data and Metadata writes

Free Capacity = Usable Capacity – Used Capacity

%Full = Used Capacity / Usable Capacity

Note

Capacity alerts are based on Free Capacity
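The physical-view formulas above can be checked with a few lines of arithmetic. This minimal Python sketch uses a hypothetical `PhysicalCapacity` type with all values in GiB, and implements exactly the Used Capacity, Free Capacity and %Full definitions given in this section.

```python
from dataclasses import dataclass


@dataclass
class PhysicalCapacity:
    """Physical-view metrics of a Pool, in GiB (hypothetical type)."""
    usable: float
    used_by_volumes: float
    used_by_metadata: float
    used_by_data_copies: float

    @property
    def used(self) -> float:
        # Used Capacity = Used by Volumes + Used by metadata + Used by data copies
        return self.used_by_volumes + self.used_by_metadata + self.used_by_data_copies

    @property
    def free(self) -> float:
        # Free Capacity = Usable Capacity - Used Capacity
        return self.usable - self.used

    @property
    def percent_full(self) -> float:
        # %Full = Used Capacity / Usable Capacity, expressed as a percentage
        return 100.0 * self.used / self.usable


pool = PhysicalCapacity(usable=1000, used_by_volumes=600,
                        used_by_metadata=50, used_by_data_copies=100)
print(pool.used, pool.free, pool.percent_full)  # 750 250 75.0
```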

Virtual View

Provisioned Capacity - Sum of Pool’s Volumes and Clones capacities as seen by the hosts

Allocated Capacity - Pool’s allocated address space of all Volumes, Snapshots and Clones

Allocation Limit - Max Capacity of the Pool’s address space. Depends on the Pool type.

Free Address Space = Allocation Limit – Allocated Capacity

Note

Address Space alerts are based on Free Address Space

Effective Capacity - Amount of data written to the Pool by all Volumes that can be accessed by hosts, not including space taken by Snapshots

Data Reduction Saving

Thin Provision Ratio = Provisioned Capacity / Effective Capacity

Data Reduction Ratio = Effective Capacity / Used by Volumes

Data Reduction Saving = Effective Capacity - Used by Volumes

Data Reduction Percentage = 1 - (1 / Data Reduction Ratio)

For example:

Data reduction ratio 2:1, Data Reduction Percentage 50%

Data reduction ratio 5:1, Data Reduction Percentage 80%

Data reduction ratio 20:1, Data Reduction Percentage 95%
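The data reduction formulas can be applied directly to the capacity metrics. This minimal Python sketch implements the four definitions from this section; the function names and the sample figures (10 TiB written by hosts, 2 TiB physically used, 30 TiB provisioned) are illustrative assumptions.

```python
def thin_provision_ratio(provisioned: float, effective: float) -> float:
    # Thin Provision Ratio = Provisioned Capacity / Effective Capacity
    return provisioned / effective


def data_reduction_ratio(effective: float, used_by_volumes: float) -> float:
    # Data Reduction Ratio = Effective Capacity / Used by Volumes
    return effective / used_by_volumes


def data_reduction_saving(effective: float, used_by_volumes: float) -> float:
    # Data Reduction Saving = Effective Capacity - Used by Volumes
    return effective - used_by_volumes


def data_reduction_percentage(ratio: float) -> float:
    # Data Reduction Percentage = 1 - (1 / Data Reduction Ratio), as a percent
    return 100.0 * (1.0 - 1.0 / ratio)


# Hosts wrote 10 TiB that occupies 2 TiB physically; 30 TiB is provisioned.
effective, used_by_volumes, provisioned = 10.0, 2.0, 30.0
ratio = data_reduction_ratio(effective, used_by_volumes)
print(f"{ratio:.0f}:1, saved {data_reduction_saving(effective, used_by_volumes):.0f} TiB, "
      f"{data_reduction_percentage(ratio):.0f}%, "
      f"thin provisioning {thin_provision_ratio(provisioned, effective):.0f}:1")
```

For these figures the sketch reports a 5:1 ratio, 8 TiB saved and 80%, matching the 5:1 example above.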

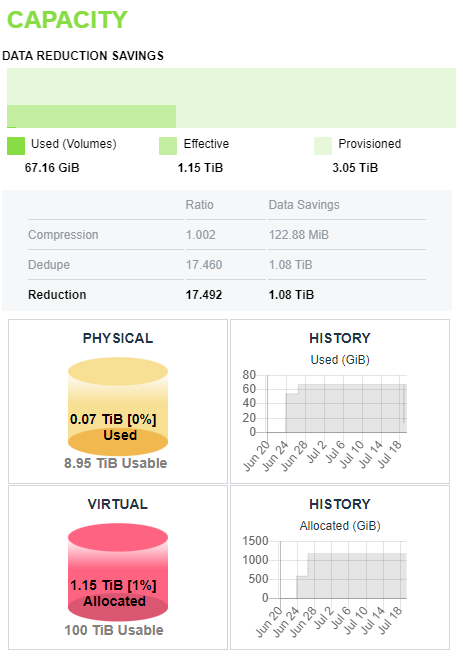

Pool Capacity Monitoring

The VPSA Flash Array Dashboard shows the capacity consumption and data reduction saving.

The upper bar shows the current capacity provisioned to the hosts by all Pools vs. the effective capacity written by the hosts vs. the physical space needed to store the data.

The lower chart shows the trend over time of the physical capacity used and available.

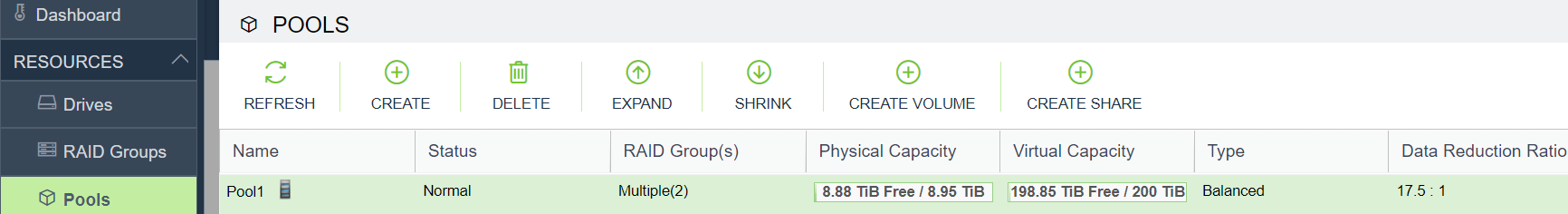

The Pools table on the Pools page shows 2 bars per Pool:

The Physical capacity bar shows the usable vs. used capacities.

The Virtual capacity bar shows the allocated capacity vs. the allocation limit.

Viewing the List of Pools

The Pools page displays a table listing the VPSA’s Pools in the upper part of the screen.

Both VPSA Storage Array Pools and VPSA Flash Array Pools have the following common columns:

| Property | Description |
|---|---|
| Name | User assigned name. Can be modified anytime. |
| Status | |
| RAID Group(s) | RAID Group name, or “Multiple (X)” where X denotes the number of RAID Groups in the Pool. |

Columns specific to VPSA Storage Array Pools:

| Property | Description |
|---|---|
| Capacity | Total available capacity for user data and system metadata. |
| Type | Transactional Workloads, Repository Storage, or Archival Storage. |
| Cached | Yes/No – Indicates whether the Pool utilizes SSD for read/write caching. |
| Provisioned Capacity | Sum of Pool’s Volumes and Clones capacities as seen by the hosts. |

Columns specific to VPSA Flash Array Pools:

| Property | Description |
|---|---|
| Physical Capacity | The usable vs. used capacities in the Pool. |
| Virtual Capacity | The allocated capacity vs. the allocation limit in the Pool. |
| Type | IOPS-Optimized, Balanced, or Throughput-Optimized. |
| Data Reduction Ratio | Capacity savings by all data reduction techniques. Data Reduction Ratio = Effective Capacity / Used by Volumes |

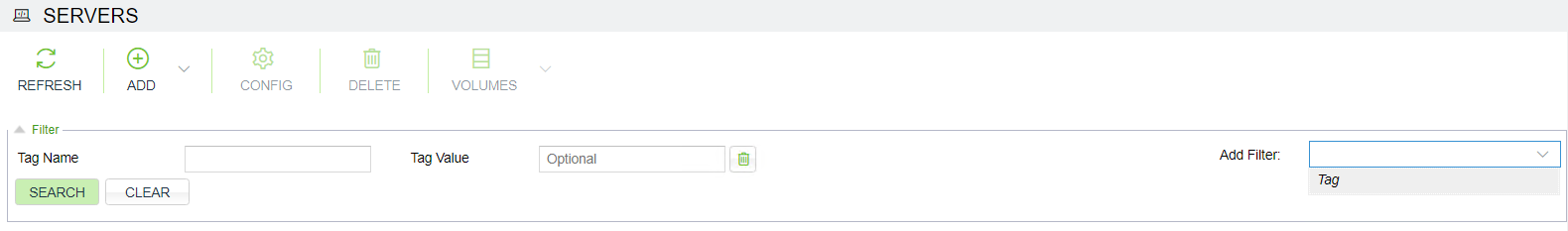

Filtering the List of Pools

To filter the list of Pools displayed in the center pane, you can use a predefined custom tag.

Expand the Filter control.

On the right, click the Add Filter dropdown, and select Tag. The tag input fields appear on the left.

The Tag filter requires input of the predefined custom Tag Name, and optionally, a Tag Value to further refine filtering for tags that have specific values for assigned Pools. Wildcards are not accepted. See the Tags tab section for configuring predefined custom tags.

Click Search to apply the filter.

To remove the filter, click Clear, or click the trash icon to the right of the filter. Click Search again to refresh the Pools list.
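The Tag filter matches the Tag Name exactly and, when a Tag Value is given, the value exactly; wildcards are not interpreted. This minimal Python sketch models that behavior over a hypothetical in-memory mapping of Pool names to tags. It does not call any VPSA API, and `filter_pools` is an illustrative name.

```python
from typing import Dict, List, Optional

# Hypothetical in-memory model: Pool name -> {Tag Name: Tag Value}.
Pools = Dict[str, Dict[str, str]]


def filter_pools(pools: Pools, tag_name: str, tag_value: Optional[str] = None) -> List[str]:
    """Return the Pools carrying tag_name (and tag_value, if given), by exact match."""
    matches = []
    for pool_name, tags in pools.items():
        if tag_name not in tags:
            continue
        if tag_value is not None and tags[tag_name] != tag_value:
            continue
        matches.append(pool_name)
    return matches


pools = {
    "pool-a": {"env": "prod"},
    "pool-b": {"env": "test"},
    "pool-c": {"owner": "db-team"},
}
print(filter_pools(pools, "env"))          # ['pool-a', 'pool-b']
print(filter_pools(pools, "env", "prod"))  # ['pool-a']
print(filter_pools(pools, "env", "*"))     # [] because "*" is not a wildcard
```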

Creating and Managing Pools

Creating a Pool

Note

By default, when a new VPSA is created, a default Pool is automatically created for each type of drive selected for the VPSA.

If the default Pool does not meet the needs, you can delete it and follow the process described here to create your own Pools.

To create a new Storage Pool, click the Create button or the Create Pool button. There are two methods to create a Pool:

Create a Pool from RAID Groups

Create a Pool from drives, and let the system automatically create the needed RAID Groups.

You can toggle between the two by clicking Use Drive Selection / Use RAID Group Selection at the lower left corner of the dialog.

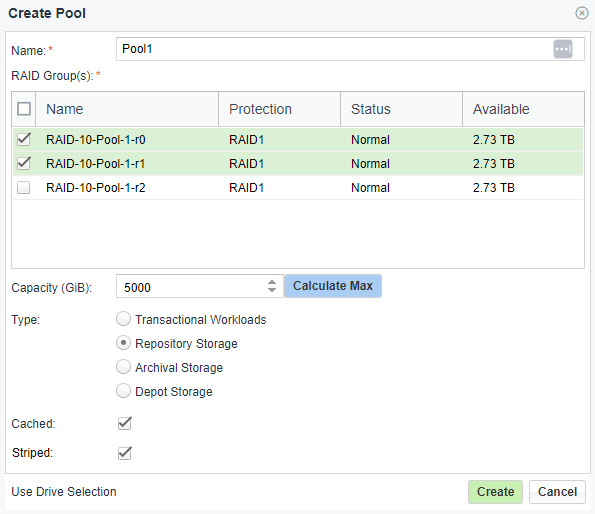

Create a Pool from RAID Groups

To create a Pool from RAID Groups, click the Create button on the Pools page. The Create Pool dialog opens. At the lower left, select Use RAID Group Selection from the toggle:

Select the Pool attributes:

Display Name – You can modify this anytime later.

RAID Group(s) selection – Check the box(es) of one or more RAID Groups from which protected storage capacity will be allocated for this Pool.

Capacity – The Pool’s physical capacity, shown in GB. By default, the capacity is the aggregated capacity of all the selected RAID Groups, but you do not have to allocate full RAID Groups. If you define a capacity smaller than what is available in the selected RAID Groups, the capacity is evenly distributed between the RAID Groups.

Note

The actual usable capacity of the Pools is a little less than the requested size, as the system reserves some space for the Pool’s metadata (typically up to 100GB).

Type

VPSA Storage Array supports the Transactional, Repository, Archive and Depot Pool types.

VPSA Flash Array supports the IOPS-Optimized, Balanced and Throughput-Optimized Pool types.

These Pool types use different chunk sizes for the mapping of virtual LBAs to Physical Drive addresses.

The following tables describe the tradeoffs for each type and the recommended use cases:

VPSA Storage Array Pool types:

| | Transactional Pool | Repository Pool | Archive Pool | Depot Pool |
|---|---|---|---|---|
| Chunk size | 256KB | 1MB | 2MB | 4MB |
| Pros | Faster COW operation; space efficiency on random writes to Snapshots | Smaller metadata size; sequential workload performance is similar to Transactional | Allows large Pools; sequential workload performance is the same | Optimal capacity consolidation for backup and media storage |
| Cons | Increased metadata size | Slower COW operation; less space efficient | Slower with frequent data modifications; limited Snapshot frequency (1 hour minimum) | Slower for small block transactional workloads (optimal support for workloads with IO block size >= 256KB); limited Snapshot frequency (1 per day with 30 days retention); less space-efficient for small files (recommended for file sizes >= 1MB) |
| Use case | Transactional workload with Snapshots | Repository type workload; large Pools; many Snapshots to keep | Relatively static data; archive type workloads; very large Pools/Volumes (> 100TB) | Backup repositories; media repositories; large files archive |
| Maximum size | 20TiB | 100TiB | 200TiB | 500TiB |

VPSA Flash Array Pool types:

| | IOPS-Optimized Pool | Balanced Pool | Throughput-Optimized Pool |
|---|---|---|---|
| Thin Provision chunk size | 1MB | 2MB | 4MB |
| Deduplication chunk size | 16KB | 32KB | 64KB |
| Pros | Better deduplication; lower COW overhead for small block I/O | Smaller metadata size; allows large Pools; better sequential workload throughput compared to IOPS-Optimized Pools; better compression ratio compared to IOPS-Optimized Pools | Optimal capacity consolidation for backup and media storage; better sequential workload throughput compared to other Pool types; better compression ratio compared to other Pool types |
| Cons | Increased metadata size | Higher COW overhead for I/Os smaller than 16KB compared to IOPS-Optimized Pools; lower deduplication efficiency compared to IOPS-Optimized Pools | Higher COW overhead for I/Os smaller than 32KB compared to other Pool types; higher latency for small block writes (< 64KB) due to read-modify-write (RMW); less space-efficient for small files (recommended for file sizes >= 1MB); low deduplication efficiency (recommendation is to turn deduplication off) |
| Use case | Analytics; small block IOPS workloads; high IOPS; database (OLTP); deduplication-friendly data | Pools/Volumes (> 100TB); archive type workloads; workloads with average IO block size of 32KB; general purpose; file system; relatively static data | Backup repositories; media repositories; large file archives; archive type workloads; sequential workloads such as video streaming; workloads with average IO block size > 128KB |
| Maximum size | 100TiB | 200TiB | 500TiB |

When there are a number of Pools in a given VPSA, there is a limit to the aggregated total size of all Pools.

The following table lists the maximum capacity per Pool type in TB, per VPSA Flash Array engine:

| Pool type | H100 | H200 | H300 | H400 |
|---|---|---|---|---|
| IOPS-Optimized | 60 | 100 | 100 | 100 |
| Balanced | 100 | 160 | 200 | 200 |
| Throughput-Optimized | 140 | 220 | 400 | 500 |

Note

In most cases, the maximum provisioned capacity is the same as the maximum usable capacity for that engine and Pool type configuration.

An H400 engine with a Balanced Pool supports a maximum provisioned capacity of 250TB when the overall data reduction ratio for the array is 1.5:1 or higher.

Cached – Check this box to use SSD to cache the servers’ reads and writes.

All Pools that are marked as “Cached” share the VPSA Cache.

Flash cache usually improves the performance of Volumes in HDD-based Pools. However, the benefit depends on the specific workload and on the size of the cache relative to the size of the active data set.

If the Pool consists of SSD drives this option will be disabled.

Striped – This check box is enabled only when you select two or more RAID Groups. Striping over RAID-1 creates a RAID-10 configuration. Use striping to improve performance of random workloads, since the IOs will be distributed and all drives will share the workload.

VPSA Flash Array Pools are always striped, and the Striped check box is hidden.
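The Capacity attribute and the per-engine limits table can be sanity-checked before submitting the dialog. This minimal Python sketch assumes that the table values cap a single Pool's capacity (the aggregate limit across several Pools is not modeled). It also assumes that every selected RAID Group must have room for an equal share, since the documentation does not say what happens otherwise. `MAX_CAPACITY_TB`, `within_engine_limit` and `split_capacity` are hypothetical names.

```python
# Maximum capacity (TB) per VPSA Flash Array Pool type and engine,
# copied from the table in this section.
MAX_CAPACITY_TB = {
    "IOPS-Optimized":       {"H100": 60,  "H200": 100, "H300": 100, "H400": 100},
    "Balanced":             {"H100": 100, "H200": 160, "H300": 200, "H400": 200},
    "Throughput-Optimized": {"H100": 140, "H200": 220, "H300": 400, "H400": 500},
}


def within_engine_limit(pool_type: str, engine: str, capacity_tb: float) -> bool:
    """Check a requested Pool capacity against the per-engine table."""
    return capacity_tb <= MAX_CAPACITY_TB[pool_type][engine]


def split_capacity(requested_gb: float, available_gb: list) -> list:
    """Evenly distribute the requested capacity across the selected RAID Groups.

    Assumption: each selected RAID Group must be able to hold an equal share.
    """
    if not available_gb:
        raise ValueError("select at least one RAID Group")
    share = requested_gb / len(available_gb)
    if any(share > free for free in available_gb):
        raise ValueError("not every RAID Group can hold an equal share")
    return [share] * len(available_gb)


print(within_engine_limit("Balanced", "H300", 150))  # True
print(split_capacity(3000, [2000, 2000, 1500]))      # [1000.0, 1000.0, 1000.0]
```

Keep in mind that the usable capacity ends up slightly below the requested size because of the metadata reservation described in the Note above.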

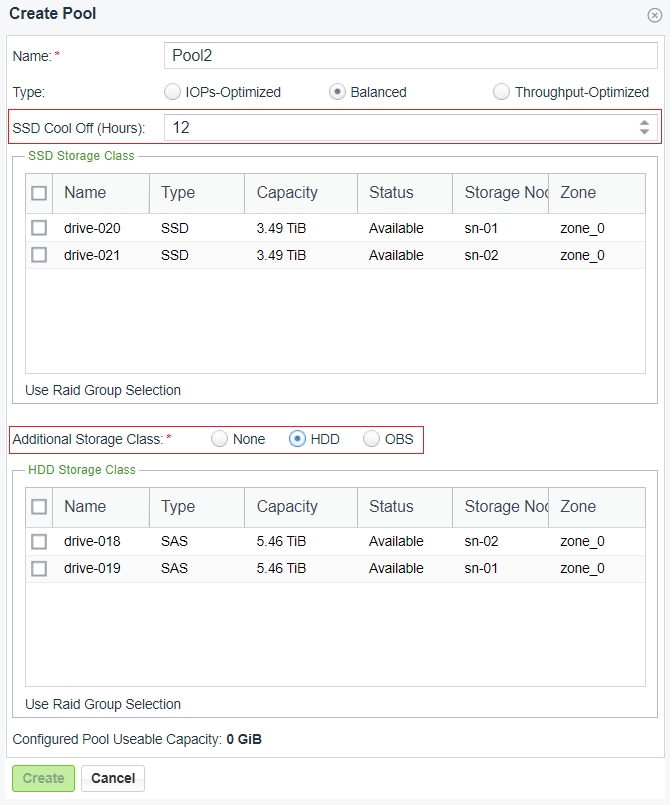

VPSA Flash Array configurations

Scope: VPSA Flash Array

Additional Storage Class: Adding a storage class defines a low tier for the Pool as HDD or remote Object Storage. Adding a storage class opens the SSD Cool Off configuration option.

SSD Cool Off: Set a goal for data retention in SSD, with a value of 0 to 720 hours (30 days). The default is 0 (disabled). The setting hints to the system that data chunks in the Pool will be accessed repeatedly within the cool-off period. When SSD utilization is around the steady state, the tiering manager takes the cool-off period into account when determining tier placement for a data chunk.
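The SSD Cool Off range and its role as a placement hint can be sketched as follows. Only the 0 to 720 hour range and the "0 disables" default come from this section. The `within_cool_off` check, which treats a chunk as inside its cool-off window if it was accessed within the configured hours, is a hypothetical simplification of how the tiering manager might consult the setting.

```python
from datetime import datetime, timedelta

MAX_COOL_OFF_HOURS = 720  # 30 days, per the documented range


def validate_cool_off(hours: int) -> int:
    """Accept an SSD Cool Off value in the documented 0-720 hour range."""
    if not 0 <= hours <= MAX_COOL_OFF_HOURS:
        raise ValueError(f"SSD Cool Off must be 0-{MAX_COOL_OFF_HOURS} hours")
    return hours


def within_cool_off(last_access: datetime, now: datetime, hours: int) -> bool:
    """Hypothetical hint check: was the chunk accessed inside the cool-off window?

    A value of 0 disables the hint, matching the documented default.
    """
    if validate_cool_off(hours) == 0:
        return False
    return now - last_access <= timedelta(hours=hours)


now = datetime(2024, 1, 10, 12, 0)
print(within_cool_off(now - timedelta(hours=5), now, 24))   # True
print(within_cool_off(now - timedelta(hours=30), now, 24))  # False
```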

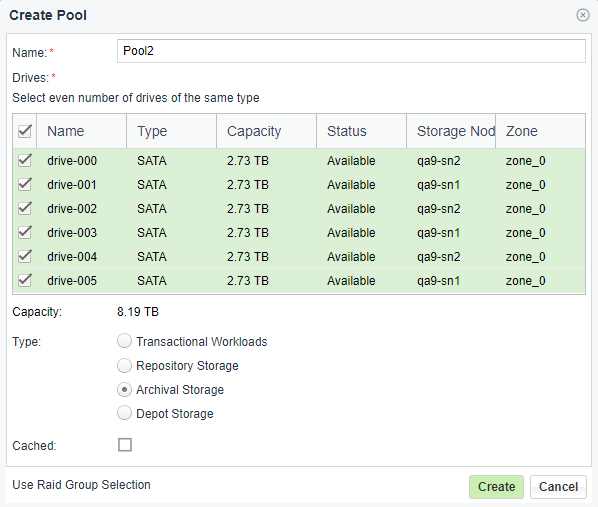

Create a Pool from Drives

To create a Pool from Drives, click the Create button on the Pools page. The Create Pool dialog opens. At the lower left, select Use Drive Selection from the toggle:

The parameters are the same as above. Check the boxes of drives that will be allocated for this Pool.

Expanding Pool Capacity

To expand a Pool, click the Expand button on the Pools page.

You can use capacity from any RAID Group to expand a Pool.

Warning

If the RAID Group from which the new capacity is added doesn’t match the protection type or drive type of the existing capacity, a warning message displays, asking you to confirm the mismatch.

Keep in mind that continuing with the mismatched types may impact the Pool performance and protection QoS.

VPSA Flash Array configuration

Scope: VPSA Flash Array

Expand in Storage Class: Choose SSD or HDD to list the storage class resource details and availability for expansion.

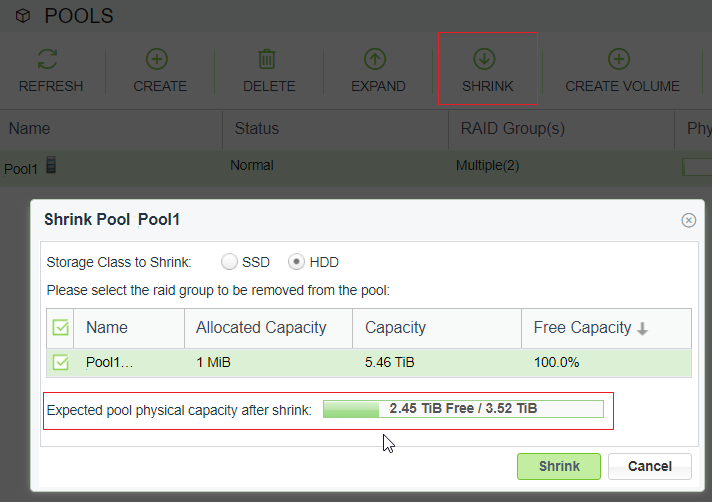

Shrinking Pool Capacity

Scope: VPSA Flash Array

Note

Pool shrink is only supported in VPSA Flash Array.

If the Pool capacity is not fully used, you can shrink its size by removing one RAID Group at a time from the Pool. The VPSA evacuates the selected RAID Group and returns it to the VPSA, either for reuse or so that the RAID Group can be deleted and its drives removed from the VPSA. To shrink a Pool, click the Shrink button on the Pools page.

Storage Class to Shrink: Choose SSD or HDD to list the storage class RAID Group details and availability for shrinkage.

Select the RAID Group to remove from the Pool. Check the physical size expected after the shrinking operation is completed, and press Shrink. The operation might take a while, depending on the amount of data to be copied to other drives. The system will generate an Event once done.
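Before pressing Shrink, you can estimate whether the remaining RAID Groups can absorb the evacuated data. This minimal Python sketch assumes that a shrink is possible only when the Pool's used capacity fits in the capacity left after removing the RAID Group. The VPSA may apply additional margins (for example, the internal spare) that the sketch ignores, and `shrink_preview` is a hypothetical name.

```python
def shrink_preview(usable_gb: float, used_gb: float, raid_group_gb: float) -> float:
    """Return the Pool's expected usable capacity after removing one RAID Group.

    Assumption: the remaining RAID Groups must be able to hold all of the
    Pool's used capacity; any extra margins the VPSA applies are ignored.
    """
    remaining = usable_gb - raid_group_gb
    if used_gb > remaining:
        raise ValueError("not enough free capacity to evacuate this RAID Group")
    return remaining


print(shrink_preview(usable_gb=6000, used_gb=2500, raid_group_gb=2000))  # 4000
```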

Caching

Scope: VPSA Storage Array

It is possible to enable Caching on non-cached Pools.

One use case for this capability is to enable caching only after the initial copy of the data into the VPSA. The initial copy typically generates a sequential write IO workload, for which non-cached Pools are most efficient. Once the initial copy is completed, enable caching on the Pool if you expect a more random IO workload.

Disabling SSD cache on a Pool

Scope: VPSA Storage Array

By default, every Pool is cached by the VPSA’s SSD cache, but you can also disable caching on a cached Pool. The Enable Cache/Disable Cache buttons toggle depending on the current caching state of the Pool.

Creating a Volume

A Volume can be created from the Create Volume button in the top menu in the Pools view or in the Volumes view. Both flows are identical.

See Creating and Deleting a Volume on the Managing Volumes, Snapshots and Clones page.

Creating a share

A NAS share can be created from the Create Share button in the top menu in the Pools view or in the Volumes view. Both flows are identical.

See Creating a NAS share on the Managing Volumes, Snapshots and Clones page.

Adding a tier

Scope: VPSA Flash Array

It is possible to add a low tier to an existing VPSA Flash Array Pool.

In the Pools view, search for the Pool in the Pool table, and mark it by clicking its row.

Click Create in the top menu. The Create Pool dialog opens.

Select the high tier from the list in the SSD Storage Class table.

Select the Additional Storage Class: Either HDD or OBS (remote Object Storage).

Depending on the selection, either the HDD Storage Class list or the OBS Storage Class list is displayed.

The SSD Cool Off field is also displayed.

Select the low tier from the HDD Storage Class or OBS Storage Class list.

Set the number of hours for SSD Cool Off, between 0 and 720 (30 days).

The cool-off period hints to the system that data chunks in the Pool will be accessed repeatedly within that period. The tiering manager references the cool-off period definition to determine tier placement for data chunks.

Click Create.

The tier’s properties will be viewable in the Tiers tab.

Viewing Pool properties

The Pool’s details are shown in the following South Panel tabs:

Properties tab

VPSA Storage Array Pool properties

Scope: VPSA Storage Array

Each VPSA Storage Array Pool has the following properties:

| Property | Description |
|---|---|
| ID | An internally assigned unique ID. |
| Name | User assigned name. Can be modified anytime. |
| Status | |
| Comment | User free text comment. Can be used for labels, reminders or any other purpose. |
| Type | |
| Mode | |
| Cached | Yes/No – Indicates whether the Pool utilizes SSD for read/write caching. |
| Cache COW Writes | Yes/No – Indicates whether flash cache is used for internal Snapshot Copy-On-Write operations. Enabled by default. Disabled only in rare cases where frequent Snapshots cause an extreme load of metadata operations. Consult Zadara support. |
| RAID Group(s) | RAID Group name, or “Multiple (X)” where X denotes the number of RAID Groups in the Pool. |
| Stripe Size | Applicable only to Pools in Striped mode (i.e. when data is striped across 2 or more RAID Groups). The Stripe size is always 64KB. |
| Created | Date & time when the object was created. |
| Modified | Date & time when the object was last modified. |
| Capacity | Total available capacity for user data & system metadata. |
| Available Capacity | Available (free) capacity to be used for user data. The VPSA reserves 2% of the total Pool capacity for system metadata. If the VPSA needs more capacity for the metadata (a very rare scenario), it is consumed from the available capacity. |
| Metadata Capacity | Capacity used by the metadata that is required for managing Pool allocation space. |
| Capacity State | See Managing Pool Capacity Alerts for more details. |

VPSA Flash Array Pool properties

Scope: VPSA Flash Array

Each VPSA Flash Array Pool has the following properties:

| Property | Description |
|---|---|
| General | |
| ID | An internally assigned unique ID. |
| Name | User assigned name. Can be modified anytime. |
| Status | |
| Comment | User free text comment. Can be used for labels, reminders or any other purpose. |
| Type | |
| RAID Group(s) | RAID Group name, or “Multiple (X)” where X denotes the number of RAID Groups in the Pool. |
| SSD Cool Off | Period (hours) within which repeated access to a data chunk in the Pool is expected. Used for automatically determining tier placement for data chunks. |
| Created | Date & time when the object was created. |
| Modified | Date & time when the object was last modified. |
| Physical Capacity | |
| Usable Capacity | Total capacity of all RAID Groups in the Pool. |
| Used Capacity | The total size of all data written in the Pool. Used Capacity = Used by Volumes + Used by Metadata + Used by Data Copies |
| Used by Volumes | Capacity used to store the Volumes’ data. |
| Used by Data Copies | Capacity used to store Snapshots and Clones. |
| Used by Metadata | Capacity used to store the Pool’s metadata. |
| Currently Inactive | Capacity of the Pool’s data chunks that are currently not being accessed. |
| Free Capacity | Available capacity in the Pool that can be used for new data and metadata writes. |
| Physical Capacity State | See Managing Pool Capacity Alerts for more details. |
| Virtual Capacity | |
| Provisioned Capacity | Sum of Pool’s Volumes and Clones capacities as seen by the hosts. |
| Allocated Capacity | Pool’s allocated address space of all Volumes, Snapshots and Clones. |
| Pattern Capacity | Capacity savings by data blocks with predefined patterns, such as all-zeroes. Blocks that match the patterns are deduplicated. |
| Effective Capacity | Amount of data written to the Pool by all Volumes that can be accessed by hosts, not including capacity taken by Snapshots. |
| Block-Virt Metadata Capacity | Capacity used by the metadata that is required for managing Pool allocation space. |
| Free Virtual Capacity State | See Managing Pool Capacity Alerts for more details. |
| Capacity Savings | |
| Data Reduction Ratio | Capacity savings by all data reduction techniques. Data Reduction Ratio = Effective Capacity / Capacity used by Volumes |
| Dedupe Ratio | Capacity savings by deduplication. |
| Compression Ratio | Capacity savings by compression. |
| Thin Provision Ratio | Capacity savings by thin provisioning technique. Thin Provision Ratio = Provisioned Capacity / Effective Capacity |

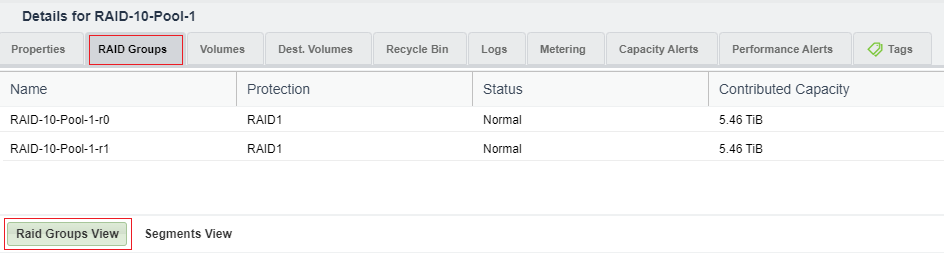

RAID Groups tab

Scope: VPSA Storage Array

The RAID Groups tab has 2 display styles to present the RAID Groups allocated to the selected Pool:

RAID Groups View

Click the RAID Groups View button to list the RAID Groups allocated to the selected Pool.

A table lists the following information for each RAID Group:

Name

Protection (RAID-1)

Status

Contributed Capacity

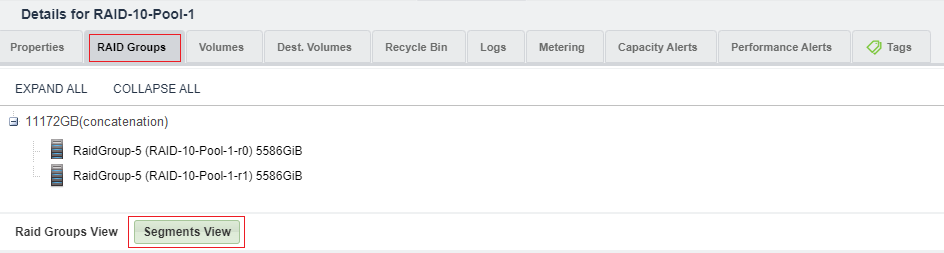

Segments View

Click the Segments View button to display the structure of a Pool made of concatenated or striped segments.

Click the Expand or Collapse controls to display the detail or summary levels.

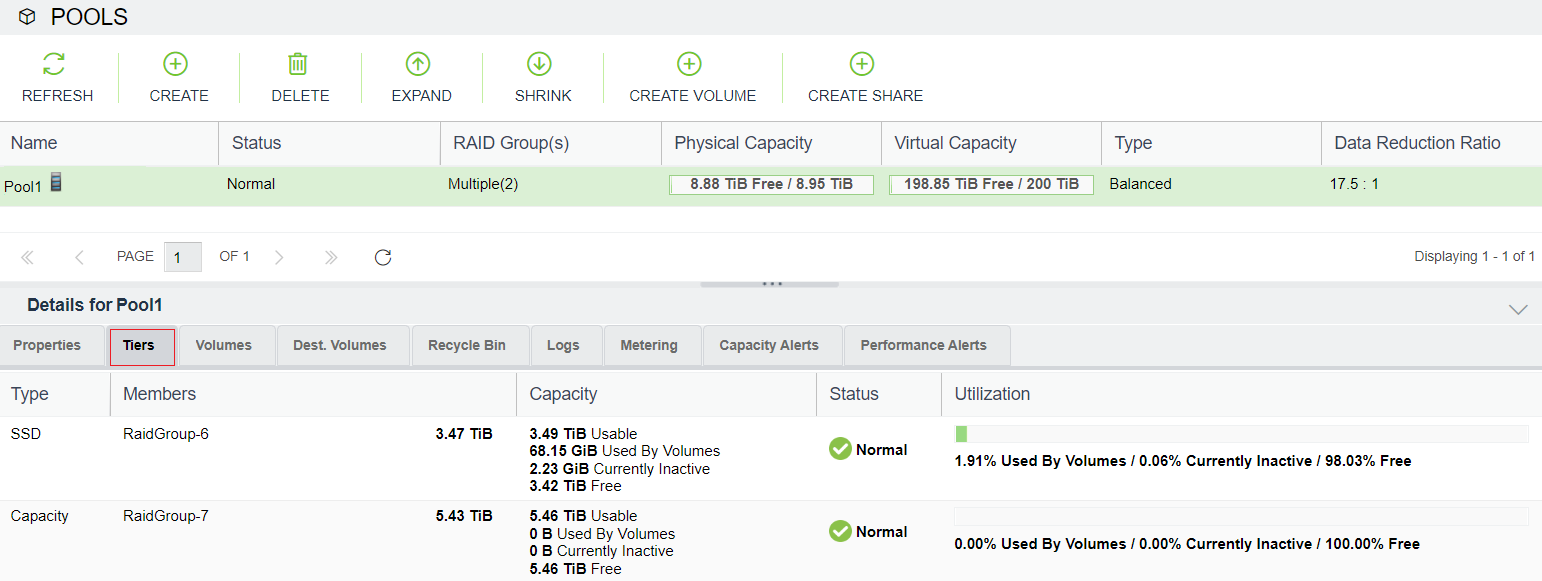

Tiers tab

Scope: VPSA Flash Array

The Tiers tab displays details of the tier types allocated to the selected Pool.

Each tier type includes the following information:

Type of tier.

Members: RAID Groups or Drives and their capacities, that are members of each tier.

Capacity: Measurements in units for Usable capacity, Used By Volumes, Currently Inactive, and Free.

Status: Operational status of the tier.

Utilization: Progress bar and measurements in percentages: Used By Volumes, Currently Inactive, and Free.

Volumes and Dest Volumes tabs

These two tabs display the provisioned Volumes and the provisioned Remote Mirroring Destination Volumes. Note that the Dest Volumes are not displayed on the main page, since most operations are not applicable to them. Listing the Dest Volumes in the Pools South Panel provides a complete picture of the objects that consume capacity from the Pool.

The Volumes tab displays the following information:

Name

Capacity (virtual, not provisioned)

Status

Data Type (Block or File-System)

The Dest Volumes tab displays the following information:

Name

Capacity (virtual, not provisioned)

Data Type (Block or File-System)

Mapped Capacity

Data Copies Capacity

Recycle Bin tab

By default when you delete a Volume it moves to a Pool’s Recycle Bin for 7 days until it is permanently deleted. From the Recycle Bin, an administrator can purge (permanently delete) or restore a Volume.

A Volume in the Recycle Bin tab has the same details that were displayed for it in the Volumes tab, before deletion:

Name

Capacity (virtual, not provisioned)

Status

Data Type (Block or File-System)

Logs tab

The Logs tab displays all event logs associated with this Pool:

Type of log entry, for example: Information, Warning, or Error.

Title - details of the logged event.

Time - the date and time of the logged event.

Metering tab

The Metering Charts provide live metering of the IO workload associated with the selected Pool.

The charts display the metering data captured in the past 20 “intervals”. The interval length can be set to one of the following: 1 second, 10 seconds, 1 minute, 10 minutes, or 1 hour. The Auto button shows continuously updating live metering info (refreshed every 3 seconds).

Pool Metering includes the following charts:

| Chart | Description |
|---|---|
| IOPS | The number of read and write SCSI commands issued to the Pool, per second. |
| Bandwidth (MB/s) | Total throughput (in MB) of read and write SCSI commands issued to the Pool, per second. |
| IO Time (ms) | Average response time of all read and write SCSI commands issued to the Pool, per selected interval. |

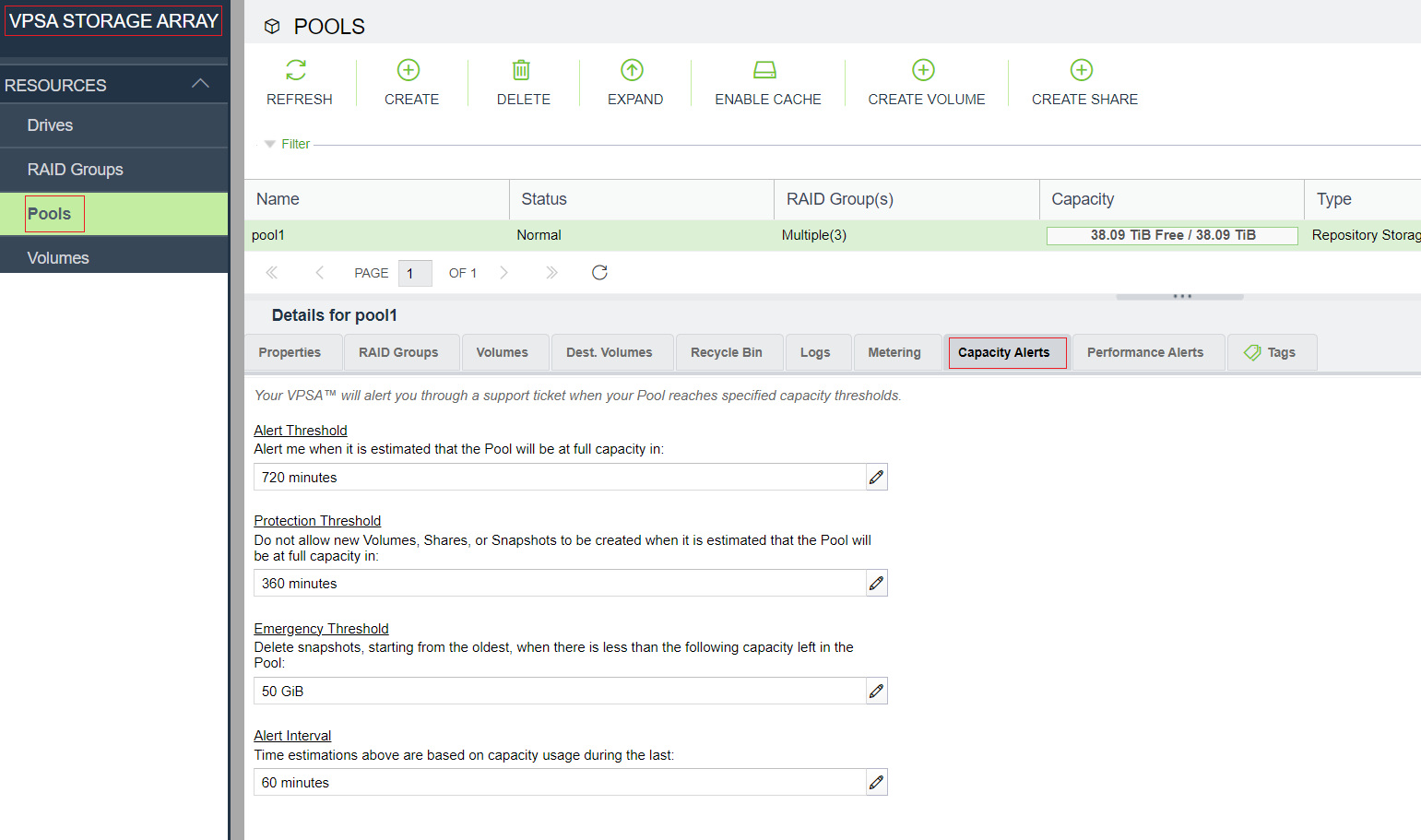

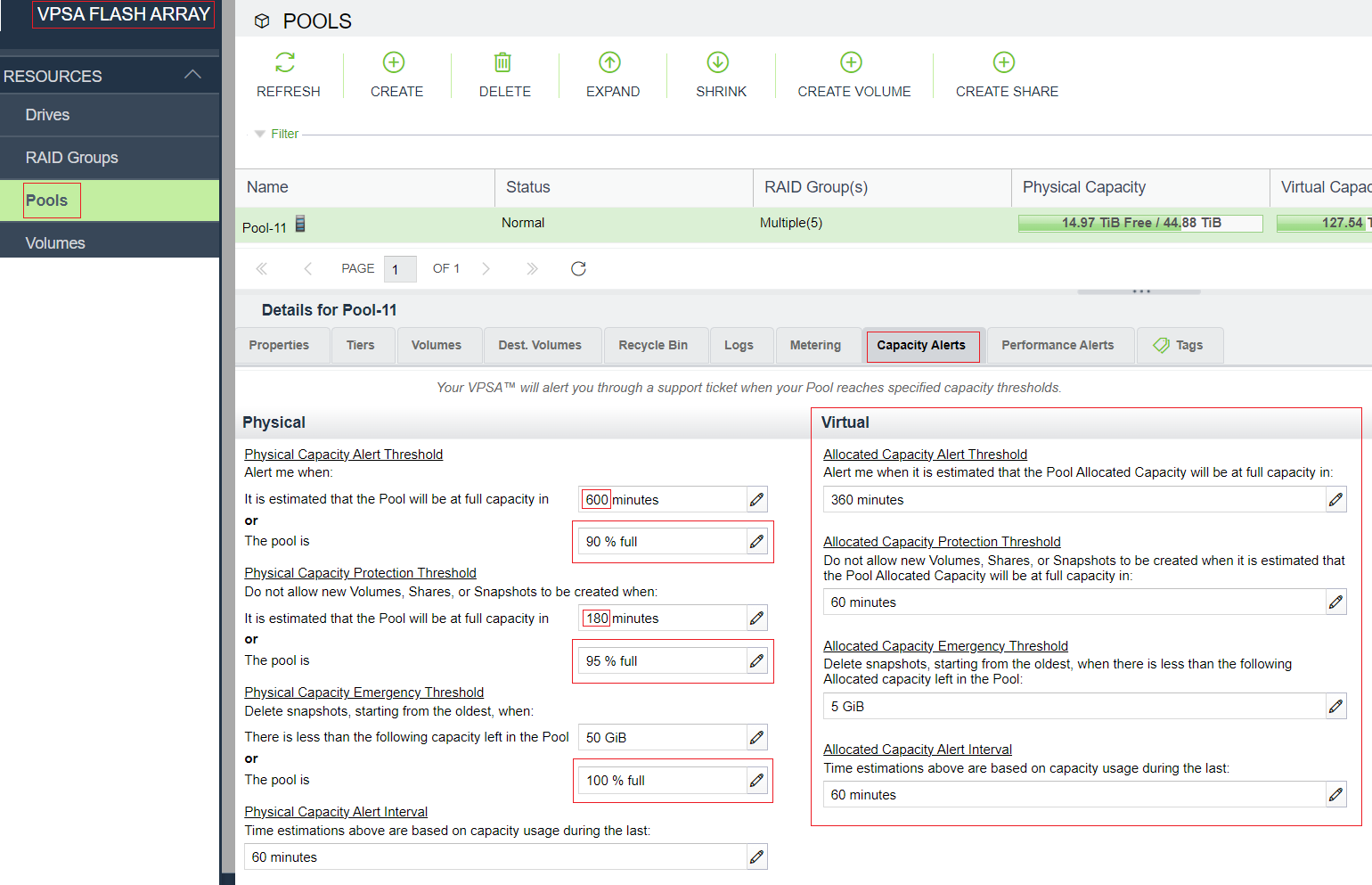
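The three charts can be derived from per-interval counters, and a bounded queue reproduces the "past 20 intervals" window. This minimal Python sketch assumes a hypothetical `IntervalSample` structure of raw counters, and it assumes that MB means 10^6 bytes; neither the counters nor the unit convention comes from the VPSA itself.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class IntervalSample:
    """Raw counters for one metering interval (hypothetical structure)."""
    reads: int
    writes: int
    bytes_transferred: int
    total_response_ms: float  # sum of response times of all commands


def chart_values(s: IntervalSample, interval_s: float) -> tuple:
    """Return (IOPS, MB/s, average IO time in ms) for one interval."""
    commands = s.reads + s.writes
    iops = commands / interval_s
    mb_per_s = s.bytes_transferred / 1_000_000 / interval_s  # assumes MB = 10**6 bytes
    io_time_ms = s.total_response_ms / commands if commands else 0.0
    return iops, mb_per_s, io_time_ms


# The charts show the last 20 intervals; a bounded deque keeps that window.
history = deque(maxlen=20)
for _ in range(25):
    history.append(IntervalSample(600, 400, 50_000_000, 2000.0))

print(len(history), chart_values(history[-1], interval_s=10))  # 20 (100.0, 5.0, 2.0)
```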

Capacity Alerts tab

The Capacity Alerts tab lists the configurable attributes of the Pool Protection Mechanism.

VPSA Storage Array capacity alerts:

VPSA Flash Array capacity alerts, and where they differ:

Note

Note the differences between the capacity alert options available for VPSA Storage Array and VPSA Flash Array

See Managing Pool Capacity Alerts for detailed information on capacity alert comparisons and configuration options.

Performance Alerts tab

The Performance Alerts tab lists the Pool’s configurations for sending alerts when performance drops below expectations. See Managing Pool Performance Alerts for more details.

Tags tab

Predefined custom tags can be configured in the Tags tab. An example use case for tags is Filtering the List of Pools in the center pane.

A tag is identified by its Tag Name and has a Tag Value associated with it. A tag can be defined only once for a Pool. However, the same Tag Name can be defined with a different Tag Value for other Pools.

Create: To create a new tag for a Pool, in the Pool’s Tags tab click Create, and enter the Tag Name and Tag Value. The tag is added to the list of tags in the Tags tab.

Edit: To change the Tag Value of an existing tag, click that tag in the tags list to mark it, and then click Edit. The Edit Tag dialog box opens, allowing you to overwrite the Tag Value.

Note

Only the Tag Value can be edited. A tag cannot be renamed. It must be deleted, and a tag with the new name configured in its place.

Delete: To delete a tag, click on that tag row in the tags list to mark it, and then click Delete. A confirmation dialog box opens.

Refresh: Displays the updated tags list.
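The tag rules above (one value per Tag Name on a Pool, only the value is editable, and renaming means delete and re-create) can be modeled as a tiny class. This is a minimal Python sketch of those rules only. `PoolTags` and its methods are hypothetical and do not correspond to a VPSA API.

```python
class PoolTags:
    """Hypothetical model of one Pool's Tags tab: one value per Tag Name."""

    def __init__(self) -> None:
        self._tags = {}

    def create(self, name: str, value: str) -> None:
        # A tag can be defined only once for a Pool.
        if name in self._tags:
            raise ValueError(f"tag {name!r} is already defined for this Pool")
        self._tags[name] = value

    def edit(self, name: str, value: str) -> None:
        # Only the Tag Value can be changed.
        if name not in self._tags:
            raise KeyError(name)
        self._tags[name] = value

    def delete(self, name: str) -> None:
        del self._tags[name]

    def replace_name(self, old: str, new: str) -> None:
        # Tags cannot be renamed in place: delete the old tag and create
        # a new one carrying the same value.
        if new in self._tags:
            raise ValueError(f"tag {new!r} is already defined for this Pool")
        value = self._tags[old]
        self.delete(old)
        self.create(new, value)

    def as_dict(self) -> dict:
        return dict(self._tags)


tags = PoolTags()
tags.create("env", "prod")
tags.edit("env", "test")
tags.replace_name("env", "stage")
print(tags.as_dict())  # {'stage': 'test'}
```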

Managing Pool Capacity Alerts

The VPSA’s efficient and sophisticated storage provisioning infrastructure maximizes storage utilization, while providing key enterprise-grade data management functions. As a result, you can quite easily over-provision a Pool with Volumes, Snapshots and Clones, hence requiring a Pool Protection Mechanism to alert and protect when free Pool space is low.

The VPSA Pool Protection Mechanism is either time-based or capacity consumption based. The goal is to provide you sufficient time to fix the low free space situation by either deleting unused Volumes/Snapshots/Clones or by expanding the Pool’s available capacity (a very simple and quick process due to the elasticity of the VPSA and the Zadara Storage Cloud).

The VPSA measures the rate at which the Pool’s free space is consumed and calculates the estimated time left before running out of free space.
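The time-based thresholds rely on an estimate of when the Pool will run out of free space. This minimal Python sketch assumes a straight-line extrapolation of the consumption measured over the Capacity Alert Interval (60 minutes by default). The VPSA's actual estimator is not documented here, and `minutes_to_full` is a hypothetical name.

```python
import math


def minutes_to_full(free_start_gib: float, free_now_gib: float, interval_minutes: float) -> float:
    """Estimate minutes until free capacity reaches zero.

    Assumes a constant consumption rate equal to the one measured over the
    last interval. If free space did not shrink, the estimate is infinite.
    """
    consumed = free_start_gib - free_now_gib
    if consumed <= 0:
        return math.inf
    rate_per_minute = consumed / interval_minutes
    return free_now_gib / rate_per_minute


# 30 GiB consumed in the last hour, with 300 GiB still free.
print(minutes_to_full(330, 300, 60))  # 600.0
```

With these figures the estimate equals the VPSA Flash Array default Capacity Alert Threshold of 600 minutes.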

User-configurable parameters determine the alerts and operations performed as part of the Pool Protection Mechanism. Capacity alerts can be viewed and configured in the Capacity Alerts tab of the Pool’s South Panel.

The following table lists the default values of the configurable capacity alert parameters for the VPSA Storage Array compared with those of the VPSA Flash Array:

| Type | Alert Parameter Name | Alert Measurement | VPSA Storage Array | VPSA Flash Array |
|---|---|---|---|---|
| Physical | Capacity Alert Threshold | Estimated remaining time to full capacity | 360 minutes | 600 minutes |
| | | Percentage of used capacity | ✗ | 90% full |
| Physical | Capacity Protection Threshold | Estimated remaining time to full capacity | 60 minutes | 180 minutes |
| | | Percentage of used capacity | ✗ | 95% full |
| Physical | Capacity Emergency Threshold | Free capacity less than | 50 GiB | 50 GiB |
| | | Percentage of used capacity | ✗ | 99% full |
| Physical | Capacity Alert Interval | Timeframe to calculate consumption rate | 60 minutes | 60 minutes |
| Virtual | Allocated Capacity Alert Threshold | Estimated remaining time to full address space | ✗ | 360 minutes |
| Virtual | Allocated Capacity Protection Threshold | Estimated remaining time to full address space | ✗ | 60 minutes |
| Virtual | Allocated Capacity Emergency Threshold | Free address space less than | ✗ | 5 GiB |
| Virtual | Allocated Capacity Alert Interval | Timeframe to calculate consumption rate | ✗ | 60 minutes |
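The VPSA Flash Array physical thresholds in the table above pair a time-based check with a percentage-based check, and whichever is reached first triggers. This minimal Python sketch derives a Free Capacity State from them under several assumptions. It assumes that "reaching" a threshold means at or past it. It assumes that 0 disables a check, as described below for the time and percentage thresholds, and extends this to the Emergency percentage. It calls the no-alert state "Normal". `PhysicalThresholds` and `capacity_state` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalThresholds:
    """VPSA Flash Array defaults from the table above; 0 disables a check."""
    alert_minutes: float = 600
    alert_percent: float = 90
    protect_minutes: float = 180
    protect_percent: float = 95
    emergency_free_gib: float = 50
    emergency_percent: float = 99


def _reached(value: float, limit: float, at_or_above: bool) -> bool:
    if limit == 0:  # 0 disables the check
        return False
    return value >= limit if at_or_above else value <= limit


def capacity_state(minutes_left: float, percent_full: float, free_gib: float,
                   t: PhysicalThresholds = PhysicalThresholds()) -> str:
    """Return the most severe state whose time or percentage threshold is reached."""
    if free_gib < t.emergency_free_gib or _reached(percent_full, t.emergency_percent, True):
        return "Emergency"
    if _reached(minutes_left, t.protect_minutes, False) or _reached(percent_full, t.protect_percent, True):
        return "Protect"
    if _reached(minutes_left, t.alert_minutes, False) or _reached(percent_full, t.alert_percent, True):
        return "Alert"
    return "Normal"  # hypothetical name for the no-alert state


# VPSA Storage Array defaults: time-based only, no percentage checks.
storage_array = PhysicalThresholds(alert_minutes=360, alert_percent=0,
                                   protect_minutes=60, protect_percent=0,
                                   emergency_percent=0)
print(capacity_state(500, 91, 500))                  # Alert (percentage reached first)
print(capacity_state(300, 97, 500, storage_array))   # Alert (time-based only)
```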

VPSA Storage Array and VPSA Flash Array physical alerts

Scope: VPSA Storage Array and VPSA Flash Array

Labels indicate where there are differences between the default values for VPSA Storage Array and VPSA Flash Array configurations of the capacity alert parameters, and for parameters available only in VPSA Flash Array implementations.

Physical Capacity Alert Threshold

“Alert me when it is estimated that the Pool will be at full physical capacity in X Minutes.”

The estimated time (in minutes) before running out of free space. When triggered, an online support ticket is submitted and an email is sent to the VPSA user. When crossing this threshold the Free Capacity State changes to “Alert” and the available capacity will be shown in Yellow. A secondary “reminder” ticket and an email will be generated when only half of this threshold’s estimated time is left.

Estimated time to reach full capacity.

Default:

360 minutes (6 hours) VPSA Storage Array

600 minutes (10 hours) VPSA Flash Array

Minimum: 1 minute (0 disables this time-based alert)

Used capacity percentage (for VPSA Flash Array only):

In addition to the time calculation, a VPSA Flash Array can also track the Pool’s used capacity percentage, and issues an alert on reaching either the time or percentage threshold, whichever is first.

Default Percentage: 90% full

Minimum: 1% (0 disables this percentage-based alert)

Physical Capacity Protection Threshold

“Do not allow new Volumes, Shares, or Snapshots to be created when it is estimated that the Pool will be at full physical capacity in X Minutes.”

The estimated time (in minutes) before the Pool runs out of free space. When triggered, the VPSA starts blocking the creation of new Volumes, Snapshots and Clones in that Pool. A support ticket and email are also generated. When this threshold is crossed, the Free Capacity State changes to “Protect” and the available capacity is shown in Red.

Estimated time to reach full capacity.

Default:

60 minutes (1 hour) VPSA Storage Array

180 minutes (3 hours) VPSA Flash Array

Minimum: 1 minute (0 disables this time-based alert)

Used capacity percentage (for VPSA Flash Array only):

In addition to the time calculation, a VPSA Flash Array can also track the Pool’s used capacity percentage, and triggers this threshold when either the time or the percentage limit is reached, whichever comes first.

Default Percentage: 95% full

Minimum: 1% (0 disables this percentage-based alert)
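A similar check can gate object creation on the Physical Capacity Protection Threshold. In this sketch, `creation_blocked` and `create_volume` are hypothetical names used only for illustration; the VPSA enforces this protection internally and its actual logic is not documented here. The defaults shown are the VPSA Flash Array values.

```python
from typing import Optional


def creation_blocked(
    minutes_to_full: Optional[float],
    used_pct: float,
    protect_minutes: int = 180,  # Flash Array default (60 on VPSA Storage Array); 0 disables
    protect_pct: int = 95,       # Flash Array only; 0 disables the percentage check
) -> bool:
    """Return True when the Pool should be in the "Protect" state."""
    time_hit = (
        protect_minutes > 0
        and minutes_to_full is not None
        and minutes_to_full <= protect_minutes
    )
    pct_hit = protect_pct > 0 and used_pct >= protect_pct
    return time_hit or pct_hit


def create_volume(name: str, minutes_to_full: Optional[float], used_pct: float) -> str:
    """Hypothetical request handler: refuse new Volumes while the Pool is protected."""
    if creation_blocked(minutes_to_full, used_pct):
        raise RuntimeError(f"Pool is in Protect state; refusing to create {name!r}")
    return name
```

The same guard would apply to Snapshot and Clone creation requests, since the Protect state blocks all three object types.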

Physical Capacity Emergency Threshold

“Delete Snapshots, starting from the oldest, when there is less than the following capacity left in the Pool.”

When the Pool’s free capacity drops below this fixed threshold (in GiB), the VPSA starts freeing Pool capacity by deleting older Snapshots. It deletes one Snapshot at a time, starting with the oldest, until free capacity is again greater than the Emergency threshold. A support ticket and email are also generated. When this threshold is crossed, the Free Capacity State changes to “Emergency” and the available capacity is shown in Red.

Free capacity less than:

Default: 50 GiB

Minimum: 1 GiB

Used capacity percentage (for VPSA Flash Array only):

In addition to the free capacity amount, a VPSA Flash Array can also track the Pool’s used capacity percentage. When either the capacity amount or the percentage threshold is reached, whichever comes first, the VPSA issues an alert and proceeds with Snapshot deletion until free capacity rises back above the Emergency threshold.

Default Percentage: 99% full

Minimum: 1% (0 disables this percentage-based alert)
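The oldest-first deletion loop described above can be sketched as follows. This is a simplified model, not the VPSA implementation: the `Snapshot` class and its `reclaimable_gib` field are hypothetical, only the GiB threshold (not the Flash Array percentage) is modeled, and the sketch assumes the capacity freed by each deletion is known immediately. In practice a Snapshot that shares chunks with a Volume or Clone may free less than its logical size.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Snapshot:
    name: str
    created_at: float       # creation time; smaller values are older
    reclaimable_gib: float  # hypothetical: capacity freed by deleting this Snapshot


def emergency_reclaim(
    free_gib: float, snapshots: List[Snapshot], threshold_gib: float = 50
) -> List[str]:
    """Return the names of Snapshots deleted, oldest first, to clear the Emergency state."""
    if free_gib >= threshold_gib:
        return []  # free capacity has not dropped below the threshold
    deleted: List[str] = []
    for snap in sorted(snapshots, key=lambda s: s.created_at):  # oldest first
        if free_gib > threshold_gib:
            break  # free capacity is greater than the threshold again
        free_gib += snap.reclaimable_gib
        deleted.append(snap.name)
    return deleted
```

If every Snapshot is deleted and free capacity is still below the threshold, the loop simply runs out of candidates; the sketch does not model what the VPSA does in that case.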

Physical Capacity Alert Interval

“Time estimations above are based on capacity usage during the last X minutes.”

The size of the window (in minutes) used to calculate the rate at which free space is consumed. The smaller the window, the more this rate is affected by short-term changes in capacity allocation, which can result from changes in workload characteristics and/or the creation or deletion of Snapshots and Clones.

Default: 60 minutes (1 hour)

Minimum: 1 minute
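The interval setting controls how the consumption rate behind the time estimates is measured. As an illustration only, the sketch below keeps used-capacity samples from the last `window_minutes` and projects a linear fill time from the oldest and newest samples in that window. The class name, the sampling scheme and the linear projection are assumptions; the VPSA’s exact estimation method is not documented here.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class ConsumptionEstimator:
    """Hypothetical sliding-window estimate of minutes until a Pool is full."""

    def __init__(self, window_minutes: float = 60):
        self.window = window_minutes
        self.samples: Deque[Tuple[float, float]] = deque()  # (minute, used_gib)

    def add(self, minute: float, used_gib: float) -> None:
        self.samples.append((minute, used_gib))
        # Drop samples that fall outside the window.
        while self.samples and self.samples[0][0] < minute - self.window:
            self.samples.popleft()

    def minutes_to_full(self, capacity_gib: float) -> Optional[float]:
        if len(self.samples) < 2:
            return None
        (t0, u0), (t1, u1) = self.samples[0], self.samples[-1]
        if t1 == t0:
            return None
        rate = (u1 - u0) / (t1 - t0)  # GiB consumed per minute over the window
        if rate <= 0:
            return None  # usage flat or shrinking: no projected fill time
        return (capacity_gib - u1) / rate
```

With a 60-minute window, samples of 100, 130 and 160 GiB at minutes 0, 30 and 60 give a rate of 1 GiB/min, so a 1,000 GiB Pool is projected to fill in 840 minutes. If usage then stays at 160 GiB at minute 90, the minute-0 sample leaves the window, the rate drops to 0.5 GiB/min and the estimate becomes 1,680 minutes, which shows how strongly the window size shapes the estimate.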

VPSA Flash Array allocated capacity alerts

Scope: VPSA Flash Array

In addition to the physical capacity alerts, the VPSA Flash Array also provides configurable thresholds to alert in cases where the Pool allocation (virtual address space) is near capacity.

Free Address Space = Allocation Limit – Allocated Capacity

The following user-configurable parameters impact alerts and operations that are performed as part of the VPSA Flash Array Pool Protection mechanism:

Allocated Capacity Alert Threshold

“Alert me when it is estimated that the Pool’s address space will be at full capacity in X Minutes.”

The estimated time (in minutes) before the Pool runs out of free address space. When triggered, an online support ticket is submitted and an email is sent to the VPSA user. When this threshold is crossed, the Allocated Capacity Alert Mode changes to “Alert” and the available address space is shown in Yellow. A secondary “reminder” ticket and email are generated when only half of this threshold’s estimated time is left.

Default: 360 minutes (6 hours)

Minimum: 1 minute (0 disables this alert)

Allocated Capacity Protection Threshold

“Do not allow new Volumes, Shares, or Snapshots to be created when it is estimated that the Pool’s address space will be at full capacity in X Minutes.”

The estimated time (in minutes) before the Pool runs out of free address space. When triggered, the VPSA starts blocking the creation of new Volumes, Snapshots and Clones in that Pool. A support ticket and email are also generated. When this threshold is crossed, the Allocated Capacity Alert Mode changes to “Protect” and the available address space is shown in Red.

Default: 60 minutes (1 hour)

Minimum: 1 minute (0 disables this alert)

Allocated Capacity Emergency Threshold

“Delete Snapshots, starting from the oldest, when there is less than the following free address space left in the Pool.”

When the Pool’s free address space drops below this fixed threshold (in GiB), the VPSA starts freeing Pool capacity by deleting older Snapshots. It deletes one Snapshot at a time, starting with the oldest, until free address space is again greater than the Emergency threshold. A support ticket and email are also generated. When this threshold is crossed, the Allocated Capacity Alert Mode changes to “Emergency” and the available address space is shown in Red.

Default: 5 GiB

Minimum: 1 GiB
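Putting the allocated-capacity parameters together, the sketch below computes Free Address Space with the formula given earlier in this section (Allocation Limit minus Allocated Capacity) and maps it, together with a time-to-full estimate for the address space, to the Allocated Capacity Alert Mode. The function name and the “Normal” label for the untriggered state are assumptions for illustration; the defaults are the ones listed in this section.

```python
from typing import Optional


def allocated_capacity_mode(
    allocation_limit_gib: float,
    allocated_gib: float,
    minutes_to_full: Optional[float],  # estimate for the address space; None if not growing
    alert_minutes: int = 360,          # Allocated Capacity Alert Threshold; 0 disables
    protect_minutes: int = 60,         # Allocated Capacity Protection Threshold; 0 disables
    emergency_gib: float = 5,          # Allocated Capacity Emergency Threshold
) -> str:
    """Return the (hypothetical) mode name for the Pool's address space."""
    free_address_space = allocation_limit_gib - allocated_gib
    if free_address_space < emergency_gib:
        return "Emergency"  # oldest-first Snapshot deletion starts
    if minutes_to_full is not None:
        if protect_minutes > 0 and minutes_to_full <= protect_minutes:
            return "Protect"  # new Volumes, Snapshots and Clones are blocked
        if alert_minutes > 0 and minutes_to_full <= alert_minutes:
            return "Alert"  # support ticket and email
    return "Normal"  # hypothetical label: no threshold crossed
```

The checks run from most to least severe, so a Pool that satisfies several conditions at once reports only the most severe mode.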

Allocated Capacity Alert Interval

“Calculate the estimated time until the Pool’s address space is full based on new capacity usage in the previous X minutes.”

The size of the window (in minutes) used to calculate the rate at which free address space is consumed. The smaller the window, the more this rate is affected by short-term changes in capacity allocation, which can result from changes in workload characteristics and/or the creation or deletion of Snapshots and Clones.

Default: 60 minutes (1 hour)

Minimum: 1 minute

Managing Pool Performance Alerts

A VPSA administrator has the option to set Pool Performance Alerts in addition to the default Pool Capacity Alerts. Performance Alerts are available for:

Read IOPS Limit – Creates an alert when, during the past minute, the average read IOPS for a Pool exceeds a user-specified threshold.

Read Throughput Limit – Creates an alert when, during the past minute, the average read MB/s for a Pool exceeds a user-specified threshold.

Read Latency Limit – Creates an alert when, during the past minute, the average read latency for a Pool exceeds a user-specified threshold.

Write IOPS Limit – Creates an alert when, during the past minute, the average write IOPS for a Pool exceeds a user-specified threshold.

Write Throughput Limit – Creates an alert when, during the past minute, the average write MB/s for a Pool exceeds a user-specified threshold.

Write Latency Limit – Creates an alert when, during the past minute, the average write latency for a Pool exceeds a user-specified threshold.
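Each Performance Alert compares a one-minute average against a user-specified limit. The following hypothetical sketch shows that check for a single metric, such as read IOPS. The class name, the sample format and the use of a plain mean over the samples from the last 60 seconds (which assumes evenly spaced samples) are assumptions, not documented VPSA behavior.

```python
from collections import deque
from typing import Deque, Tuple


class PerformanceAlert:
    """Hypothetical past-minute average check for one Pool metric."""

    def __init__(self, metric: str, limit: float, window_seconds: float = 60):
        self.metric = metric  # e.g. "read_iops" or "write_latency_ms"
        self.limit = limit    # user-specified threshold
        self.window = window_seconds
        self.samples: Deque[Tuple[float, float]] = deque()  # (timestamp_s, value)

    def add(self, ts: float, value: float) -> bool:
        """Record a sample; return True if the past-minute average exceeds the limit."""
        self.samples.append((ts, value))
        # Keep only samples from the last `window` seconds (the newest always stays).
        while self.samples[0][0] <= ts - self.window:
            self.samples.popleft()
        avg = sum(v for _, v in self.samples) / len(self.samples)
        return avg > self.limit
```

One instance per configured limit (read IOPS, write latency and so on) keeps the six alert types independent of each other.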

Deleting a Pool

The VPSA administrator can delete a Pool that is no longer needed by clicking the Delete option under the Pool top option menu.

Because the operation is sensitive, the system blocks a delete request while underlying entities (Volumes and Snapshots) still exist.

The VPSA has a built-in Recycle Bin mechanism (enabled by default) that protects against human error. A Pool cannot be deleted while Volumes remain in its Pool-level Recycle Bin. To delete the Pool, first purge all Volumes from its Recycle Bin.
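The deletion preconditions above amount to a simple pre-check. The `Pool` model and `delete_blockers` helper below are hypothetical and only restate the documented rules: a Pool that still has Volumes or Snapshots, or Volumes waiting in its Recycle Bin, cannot be deleted.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pool:
    name: str
    volumes: List[str] = field(default_factory=list)
    snapshots: List[str] = field(default_factory=list)
    recycle_bin: List[str] = field(default_factory=list)  # deleted but not yet purged Volumes


def delete_blockers(pool: Pool) -> List[str]:
    """Return the reasons a delete request would be refused (empty list: deletable)."""
    reasons: List[str] = []
    if pool.volumes:
        reasons.append(f"{len(pool.volumes)} Volume(s) still exist")
    if pool.snapshots:
        reasons.append(f"{len(pool.snapshots)} Snapshot(s) still exist")
    if pool.recycle_bin:
        reasons.append(f"{len(pool.recycle_bin)} Volume(s) in the Recycle Bin must be purged")
    return reasons
```

Reporting every blocker at once, rather than stopping at the first, lets an administrator clear all of them before retrying the delete.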